Welcome to Flatland¶

Ongoing Challenge

Take part in the NeurIPS 2020 Flatland Challenge on AIcrowd!

Flatland is an open-source toolkit for developing and comparing Multi Agent Reinforcement Learning algorithms in little (or ridiculously large!) gridworlds.

Getting started¶

Using the environment is easy for both humans and AIs:

$ pip install flatland-rl

$ flatland-demo # show demonstration

$ python <<EOF # random agent

import numpy as np

from flatland.envs.rail_env import RailEnv

env = RailEnv(width=16, height=16)

obs = env.reset()

while True:

obs, rew, done, info = env.step({0: np.random.randint(0, 5)})

if done:

break

EOF

Make your first Challenge Submission in 5 minutes!

To learn more about Flatland, read how to interact with this environment, how to get started with reinforcement learning methods or with operations research methods.

Design principles¶

Real-word, high impact problem¶

The Swiss Federal Railways (SBB) operate the densest mixed railway traffic in the world. SBB maintain and operate the biggest railway infrastructure in Switzerland. Today, there are more than 10,000 trains running each day, being routed over 13,000 switches and controlled by more than 32,000 signals. The “Flatland” Competition aims to address the vehicle rescheduling problem by providing a simplistic grid world environment and allowing for diverse solution approaches. The challenge is open to any methodological approach, e.g. from the domain of reinforcement learning or of operations research.

Tunable difficulty¶

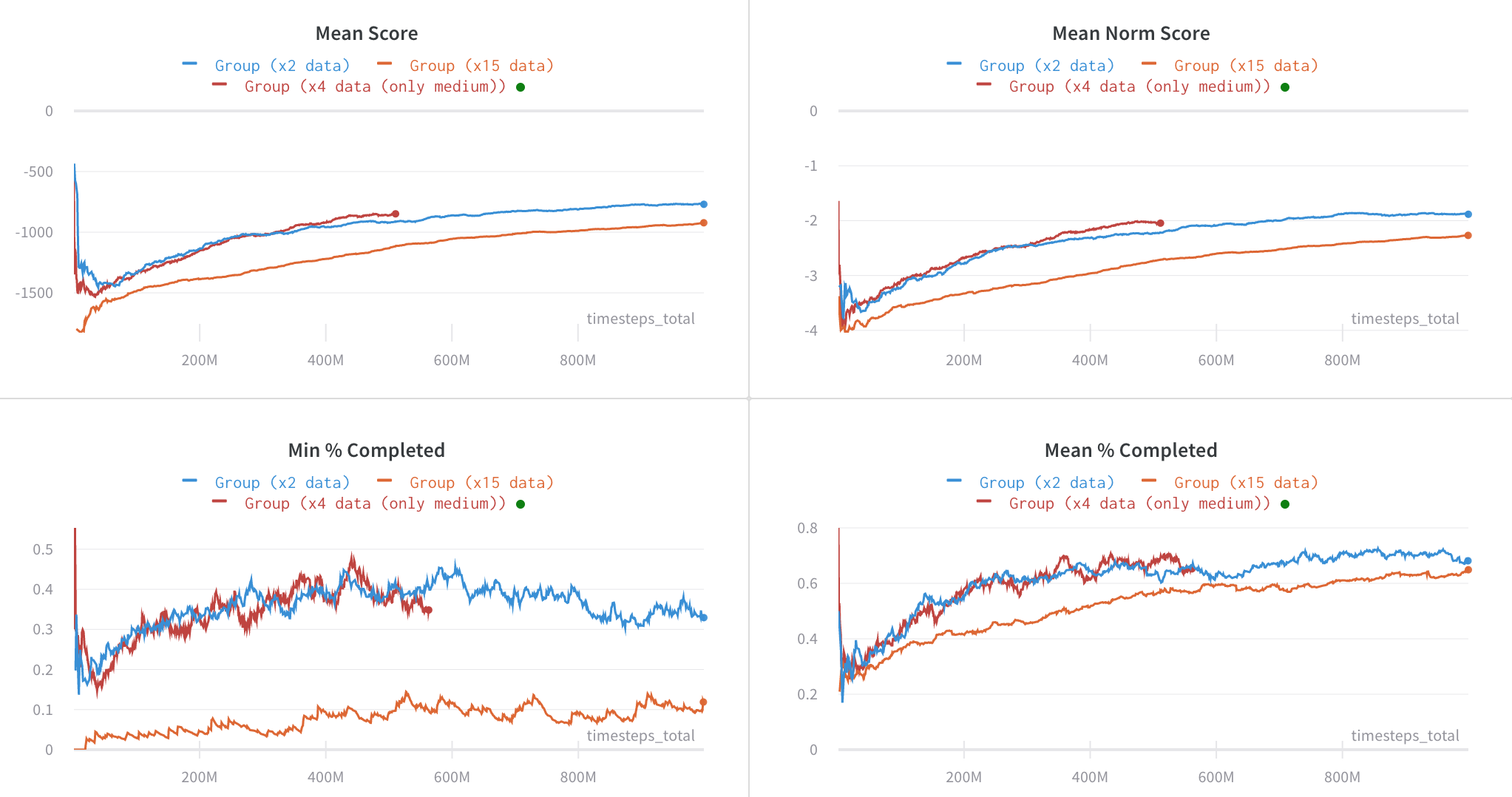

All environments support well-calibrated difficulty settings. While we report results using the hard difficulty setting, we make the easy difficulty setting available for those with limited access to compute power. Easy environments require approximately an eighth of the resources to train.

Environment diversity¶

In several environments, it has been observed that agents can overfit to remarkably large training sets. This evidence raises the possibility that overfitting pervades classic benchmarks like the Arcade Learning Environment, which has long served as a gold standard in reinforcement learning (RL). While the diversity between different games in the ALE is one of the benchmark’s greatest strengths, the low emphasis on generalization presents a significant drawback. In each game the question must be asked: are agents robustly learning a relevant skill, or are they approximately memorizing specific trajectories?

Research baselines¶

Flatland is a hard problem. We provide multiple research baselines to get you started.

Ape-X: Use a distributed prioritized DQN approach

PPO: Distributed Proximal Policy Optimisation

Imitation Learning: Learn from expert demonstrations

Next steps¶

Communication¶

Contributions¶

Read about the Contribution Guidelines for more details on how you can successfully contribute to the project. We enthusiastically look forward to your contributions.